Visualization

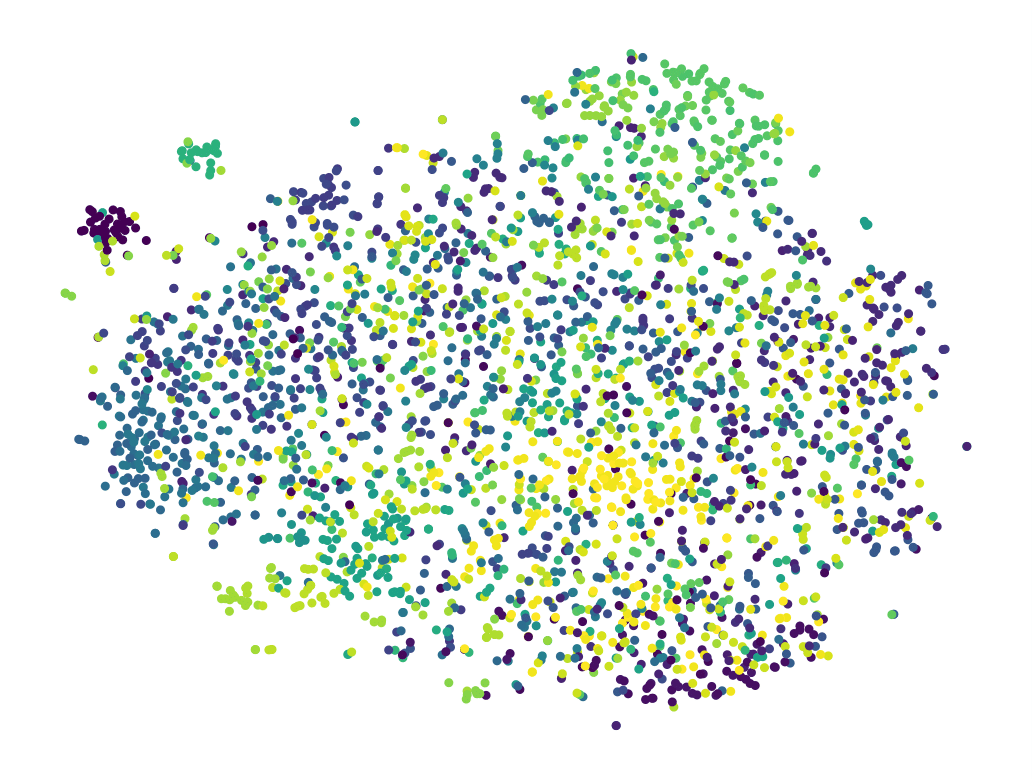

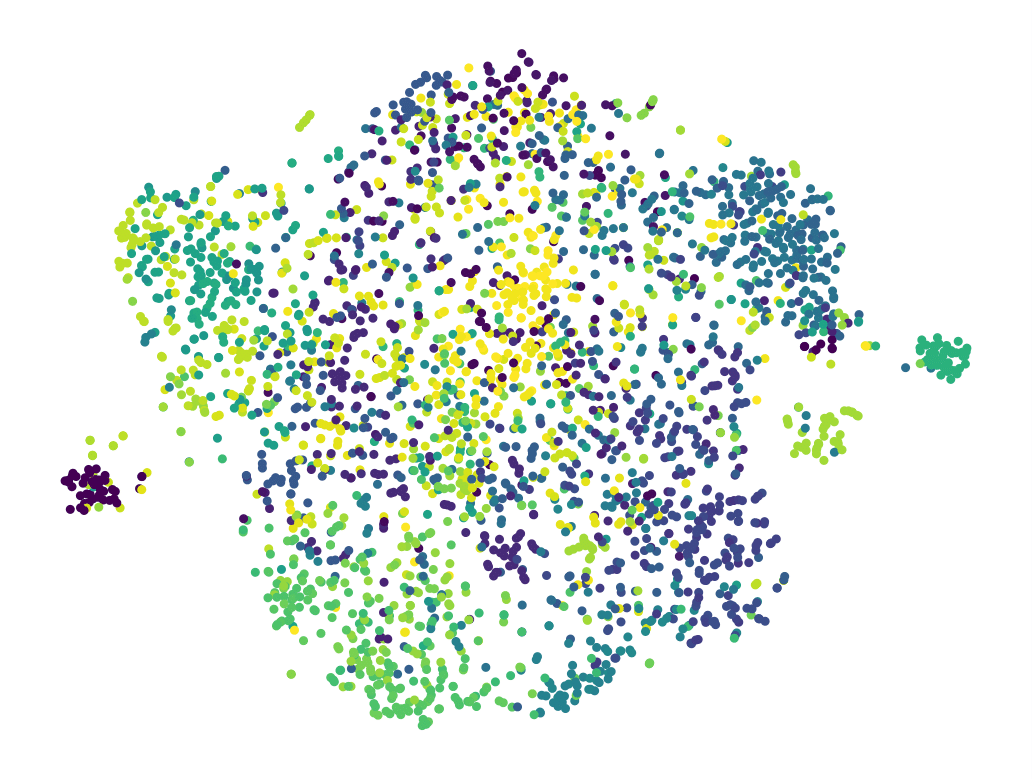

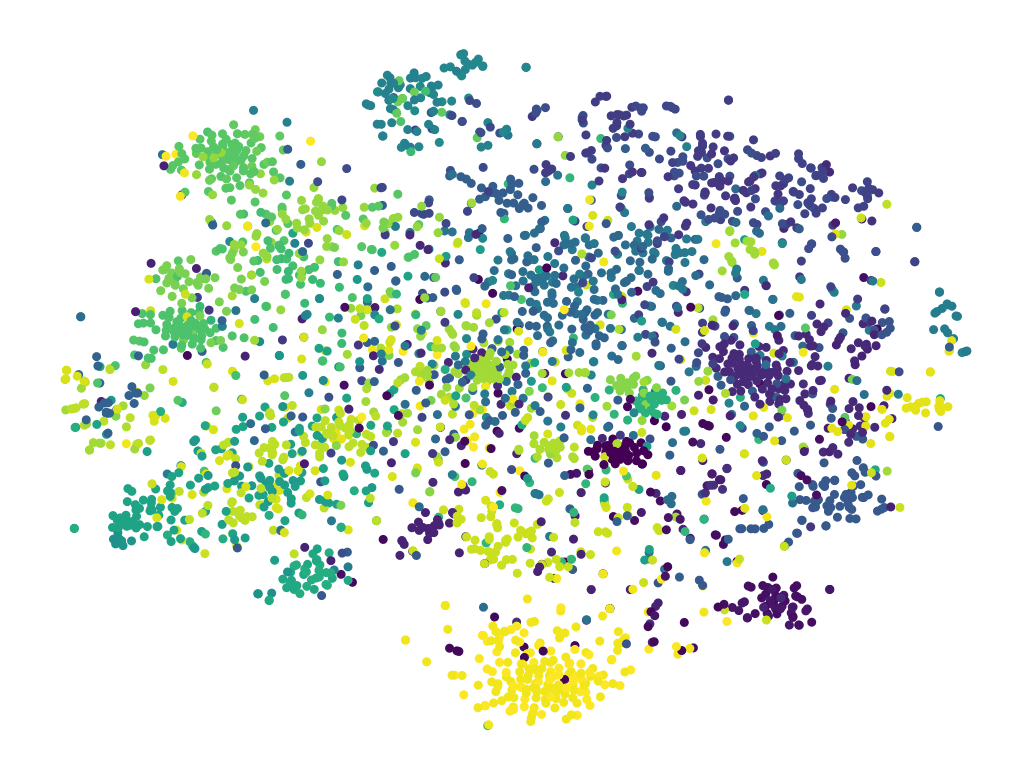

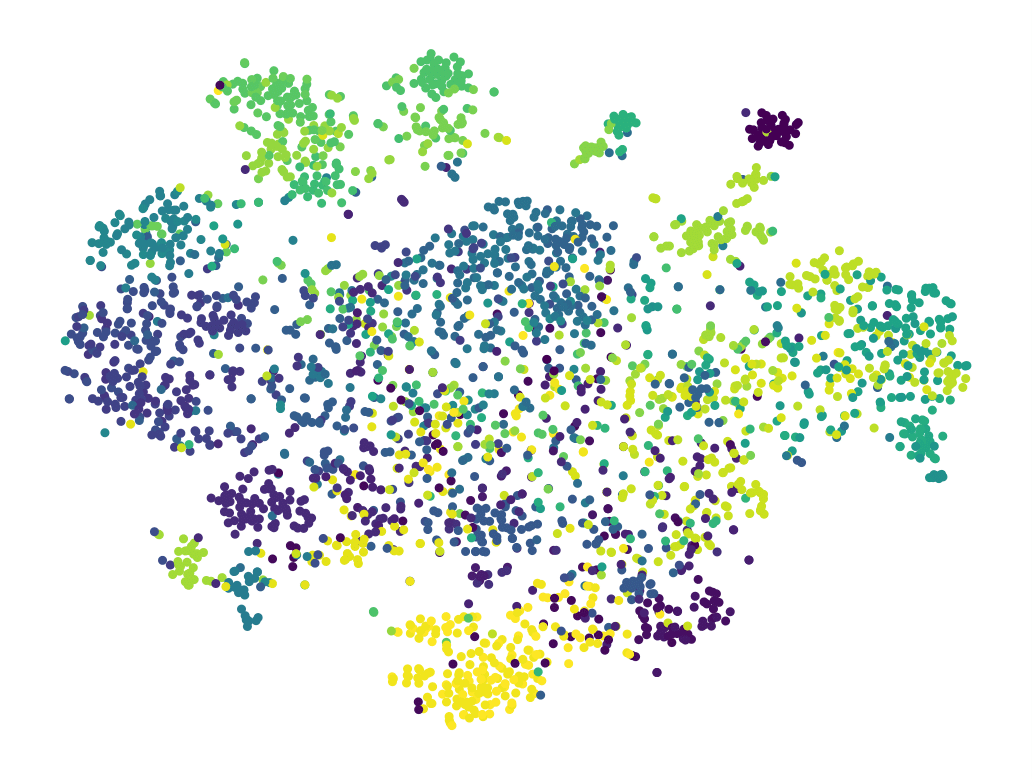

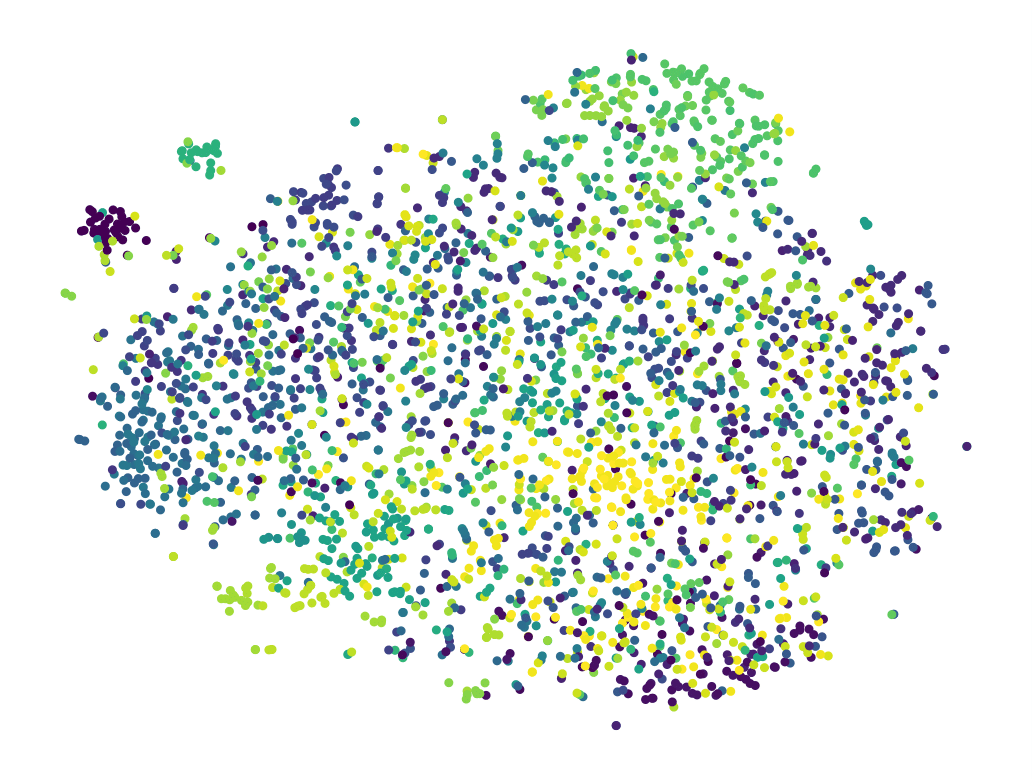

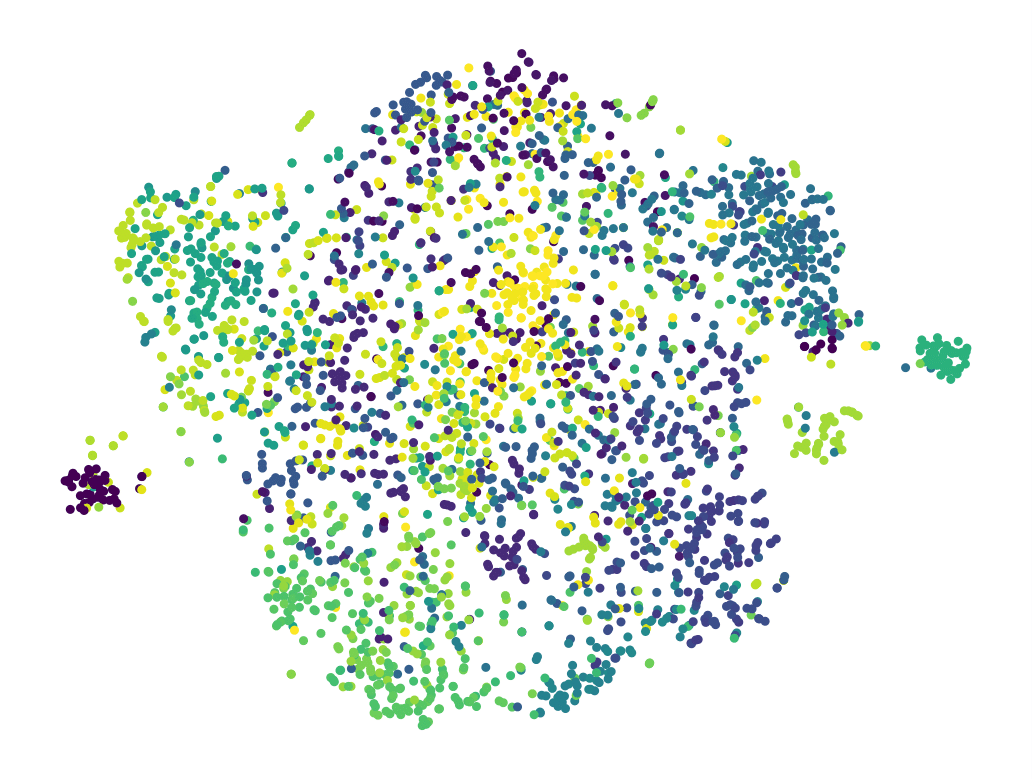

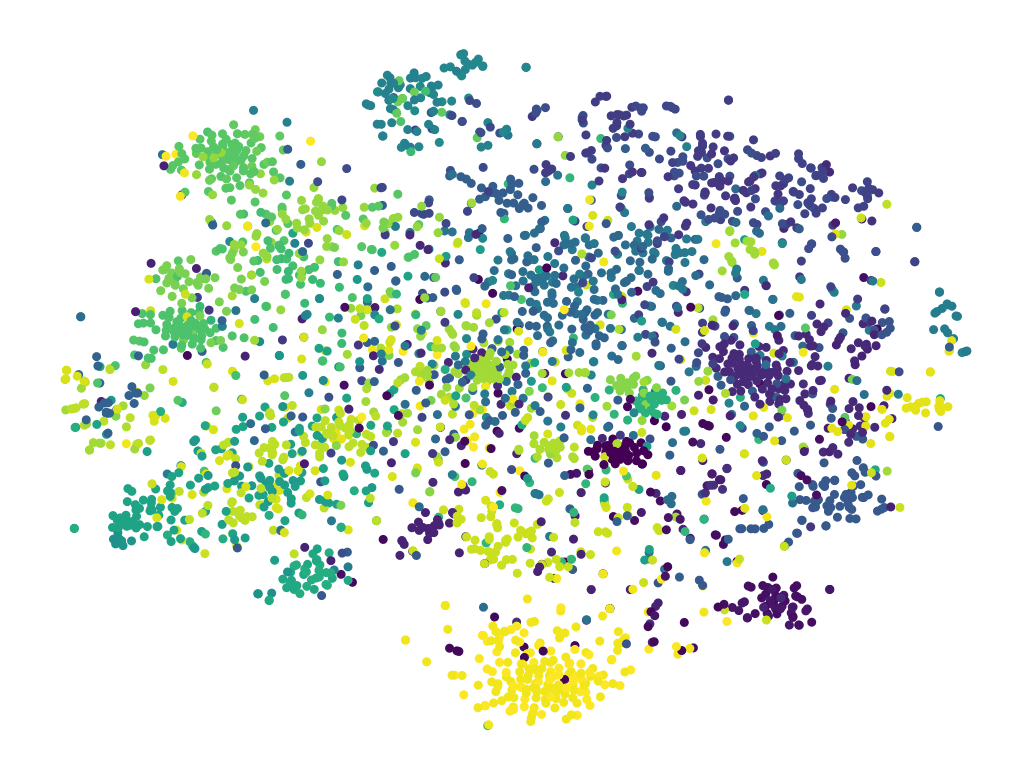

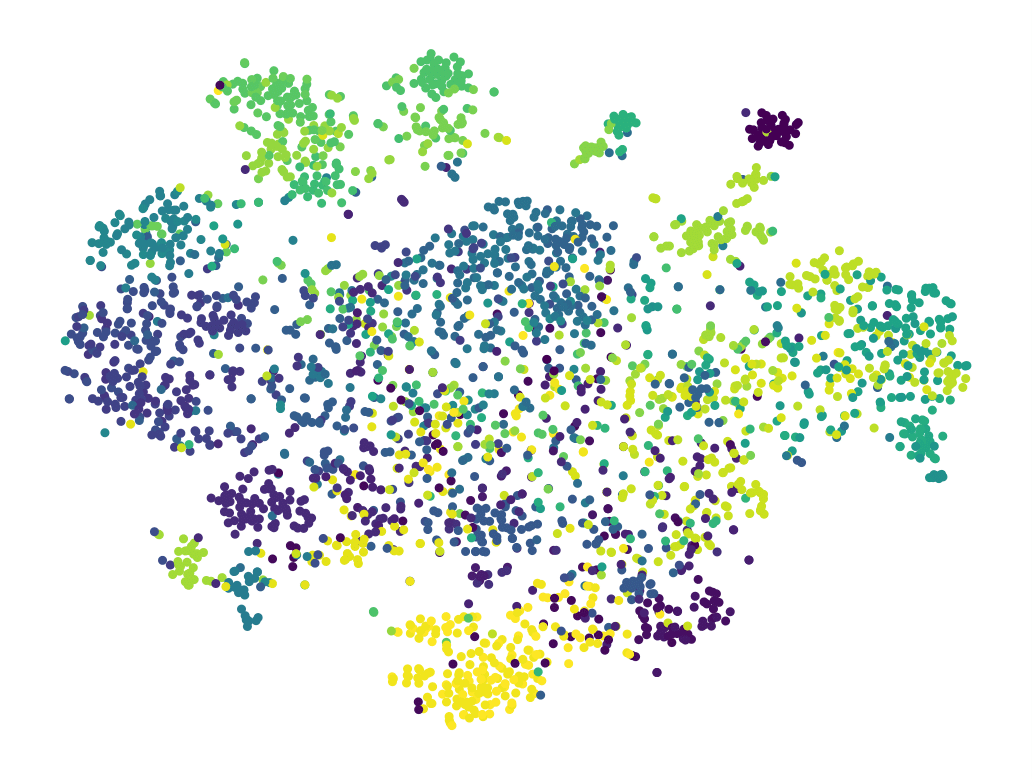

Figure 1. t-SNE visualizations on SSv2 val dataset. The top-1 accuracy is reported in red.

Although the pre-trained Vision Transformers (ViTs) achieved great success in computer vision, adapting a ViT to various image and video tasks is challenging because of its heavy computation and storage burdens, where each model needs to be independently and comprehensively fine-tuned to different tasks, limiting its transferability in different domains.

To address this challenge, we propose an effective adaptation approach for Transformer, namely AdaptFormer, which can adapt the pre-trained ViTs into many different image and video tasks efficiently. It possesses several benefits more appealing than prior arts.

Firstly, AdaptFormer introduces lightweight modules that only adds less than 2% extra parameters to a ViT, while it is able to increase the ViT’s transferability without updating its original pre-trained parameters, significantly outperforming the existing 100% fully fine-tuned models on action recognition benchmarks. Secondly, it can be plug-andplay in different Transformers and scalable to many visual tasks. Thirdly, extensive experiments on five image and video datasets show that AdaptFormer largely improves ViTs in the target domains. For example, when updating just 1.5% extra parameters, it achieves about 10% and 19% relative improvement compared to the fully fine-tuned models on Something-Something v2 and HMDB51, respectively.

Figure 1. t-SNE visualizations on SSv2 val dataset. The top-1 accuracy is reported in red.

@article{chen2022adaptformer,

title={AdaptFormer: Adapting Vision Transformers for Scalable Visual Recognition},

author={Chen, Shoufa and Ge, Chongjian and Tong, Zhan and Wang, Jiangliu and Song, Yibing and Wang, Jue and Luo, Ping},

journal={arXiv preprint arXiv:2205.13535},

year={2022}

}